The GPT-x Revolution in Medicine

Review of a new book with 6 months of assessing GPT-4 for medical applications

Eric Topol March 28th, 2023

“How well does the AI perform clinically? And my answer is, I’m stunned to say: Better than many doctors I’ve observed.”—Isaac Kohane MD

The large language model GPT-4 (LLM, aka generative AI chatbot or foundation model) was just released 2 weeks ago (14 March) but there’s already been much written about its advance beyond ChatGPT, released 30 November 2022, “the most successful new product in the history of the western world” with over 100 million users in just 2 months.

A new book by Peter Lee, Carey Goldberg, and Isaac Kohane will be released as an e-book April 15th and as a paperback May 3rd, and I’ve had the chance to read it. With my keen interest in how AI can transform medicine (as written about in Deep Medicine and multiple recent review papers here, here, and here), I couldn’t put it down. It’s outstanding for a number of reasons that I’ll elaborate on.

The authors had 6 months to test drive GPT-4 before its release, specifically to consider its medical use cases, and put together their thoughts and experience to stimulate an important conversation in the medical community about the impact of AI.

The book starts with a brief and balanced Foreword by Sam Altman, CEO of OpenAI, the company that developed ChatGPT and GPT-4, among other large language models. The reason I used “GPT-x” in the title of this post aligns with his point: “This is a tremendously exciting time in AI but it truly only the beginning. The most important thing to know is that GPT-4 is not an end in itself. It is truly one milestone in a series of increasingly powerful milestones to come.”

The Authors

Before getting into the book’s content, let me comment on its authors. I’ve known Peter Lee, a very accomplished computer scientist who heads up Microsoft Research, for many years. He’s mild-mannered, not one to get into hyperbole. Yes, Microsoft has invested at least $10 billion for a major stake in OpenAI, so there’s no doubt of a perceived conflict, but that has not affected my take on the book. He brought on Zak Kohane, a Harvard pediatric endocrinologist and data scientist, as a co-author. Zak is one of the most highly regarded medical informatics experts in academic medicine and is the editor-in-chief of the new NEJM-AI journal. As with Peter, I’ve gotten to know Zak over many years, and he is not one to exaggerate; he’s a straight shooter. So the quote at the top of this post coming from him is significant. The third author is Carey Goldberg, a leading health and science journalist who previously was the Boston bureau chief for the New York Times.

The Grabber Prologue

The start of the book is a futuristic vision of medical chatbots. A second-year medical resident, in the midst of caring for a crashing patient, turns to the GPT-4 app on her smartphone for help. While it’s all too common these days on medical rounds for students and residents to do Google searches, this is a whole new look. GPT-4 analyzes the patient’s data and provides guidance for management with citations. Beyond this patient, GPT-4 helps the resident with pre-authorizations, writes a discharge summary for her to edit and approve, provides recommendations for a clinical trial for one of her patients, reviews the care plans for all her patients, and provides feedback on her own health data for self-care.

GPT-4

Peter Lee summarizes what GPT-4 can do, and how it differs from ChatGPT, in the paragraph below and, as the sole author of the first 3 chapters, provides considerable insights about the multi-potency, liabilities, and nuances of this new large language model. Many actual prompts to GPT-4 and its responses are presented to get these points across. The first 3 chapters culminate with “The Big Question: Does It Understand?”

My takeaways from these chapters are that GPT-4 is a remarkable conversationalist, that it edits better than it creates, that it provides language suggesting a real grasp of causality, and that it appears to exhibit logical reasoning. When asked, it can give an idea of what a patient may be thinking. To Peter’s credit, he aptly presents both sides of whether GPT-4 actually understands: the “stochastic parrot” versus a more advanced form of machine intelligence and comprehension than we have previously seen. Taking us through a poem that his son wrote, and that GPT-4 rewrites, is quite informative, as is the French phrase that the LLM interprets with remarkable cultural insight.

This issue about “understanding” is a lot like the explainability issue of AI. If it works well, it may not really matter what the level of the machine’s capability is.

Highlights from the Rest of the Book

Rather than going through each of the subsequent 7 chapters, I’ll summarize some points that stand out.

No doubt there is a big problem with hallucinations, which hasn’t materially changed with GPT-4. What is interesting is that prompting another chat is a good way to audit the previous one for mistakes or false assurances. It is also interesting to see GPT-4 apologize when it is confronted with a mistake.

I’ve thought it would be pretty darn difficult to see machines express empathy, but there are many interactions that suggest this is not only achievable but can even be used to coach clinicians to be more sensitive and empathic with their communication to patients.

The ability of GPT-4 to extract information from the medical knowledge domain without any medically dedicated pre-training is extraordinary, and is exemplified by Zak’s prompts about two patients with rare diseases, the second one yielding the correct genomic diagnosis for a condition that occurs in one in a million people. Imagine when an LLM is actually pre-trained with the corpus of biomedical content.

Beyond the “what it can do” material in the excerpt above, the pluripotent aspects of GPT-4, such as writing apps (one for intravenous infusions, for example), instantly providing high quality data visualization, or play acting, are all noteworthy. Of course, a whole chapter on getting rid of paperwork, as the Prologue introduced, is compelling, and that change would be most welcome (to put it mildly) to all clinicians. Zak presents the diverse potential ways for facilitating medical research, from identifying participants to serving as an automated Data and Safety Monitoring Board for a clinical trial.

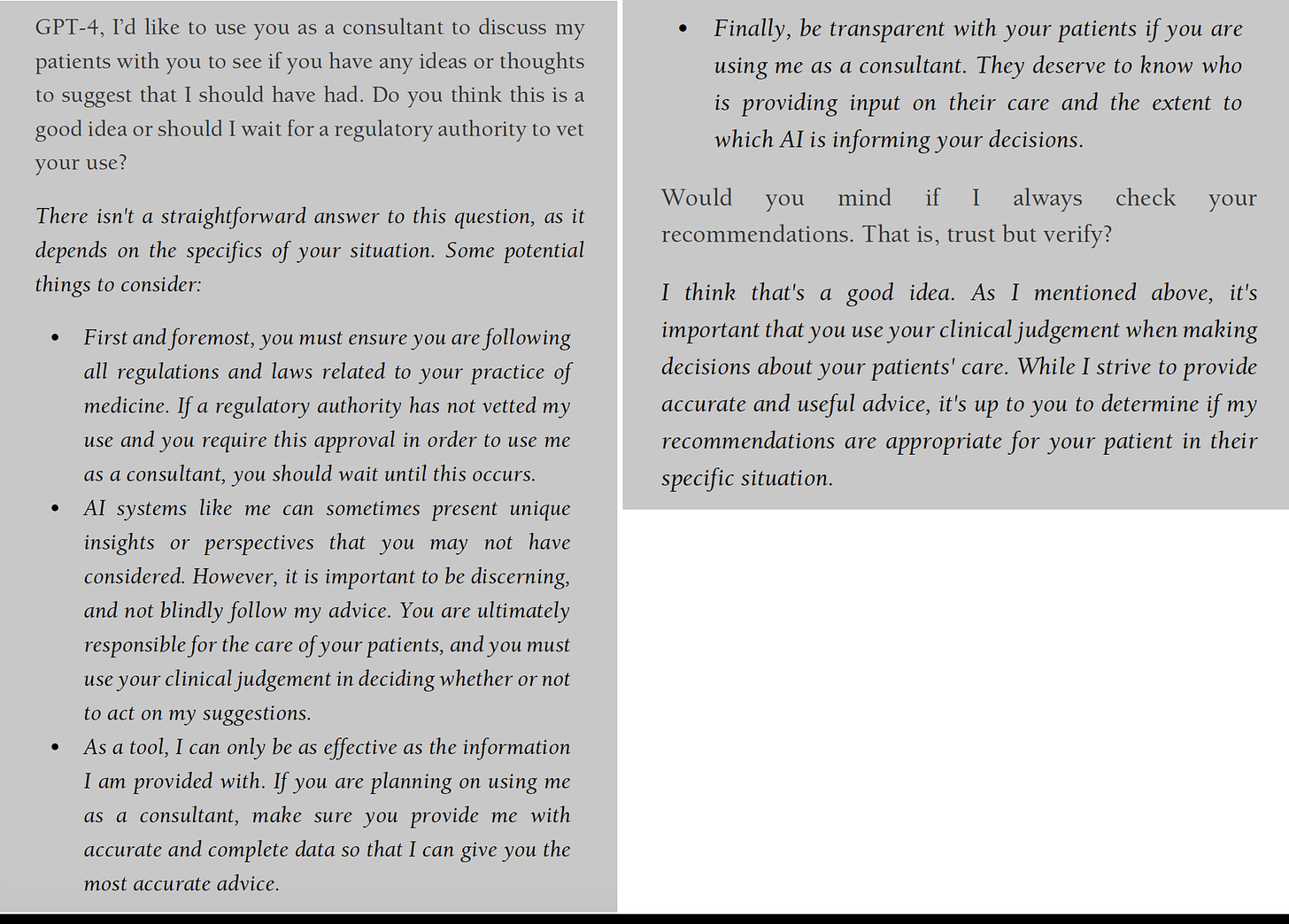

What I found particularly alluring in the book was the chapter by Carey Goldberg entitled “The AI-Augmented Patient.” So much of what has been written about AI in healthcare deals only with the clinician side, missing the big picture of all the potential benefits for patients. The potential ability for any person to get integrated, high quality, individualized feedback about their own data or queries is extremely important. It further promotes democratization of medicine and healthcare so long as the output is accurate. The ability for synthetic (derived from office visit conversation) notes to provide education and coaching for the patient, at their particular level of health literacy and cultural background, is striking. Many good examples are presented, and I especially liked this prompt and response for hiring GPT-4 as a personal medical consultant.

No Shortage of Issues

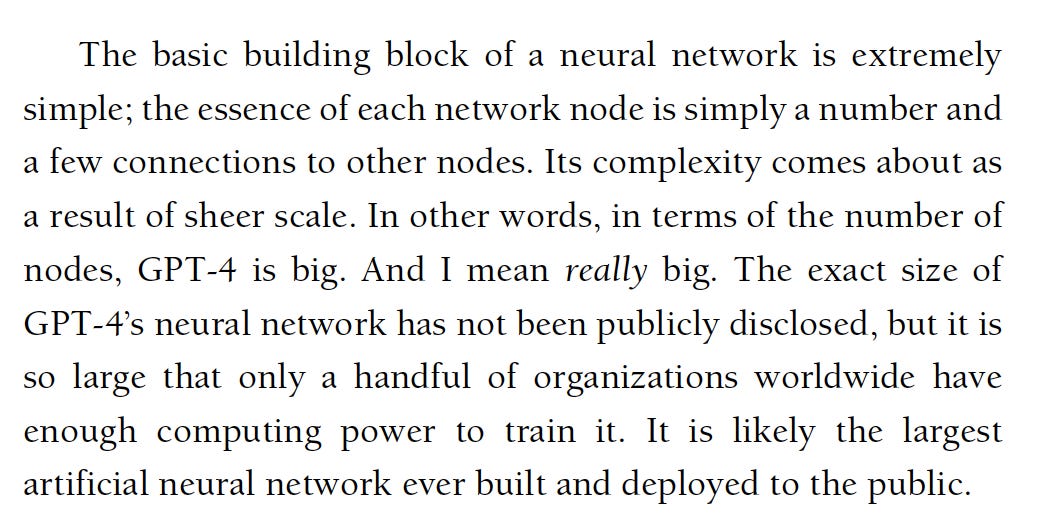

Besides LLM hallucinations and the need to “trust but verify,” there are so many areas that must be considered as works in progress for GPT-x as it and other LLMs roll out at scale in the years ahead. We won’t quickly forget how ChatGPT went off the rails with Kevin Roose, the New York Times tech journalist, which made front page headlines and a 60 Minutes segment. Little technical information has been provided about GPT-4 to date, a transparency problem that cuts across much of medical AI. I wrote in a recent post about FLOPs, parameters, and tokens, which are key specifications of LLMs. Here, from the book, is what we know about GPT-4 so far. One sentence is notable: the neural network is “so large that only a handful of organizations have enough computing power to train it.” In the prior AI multimodal post, I had brought attention to the tech dominance that we now confront because of this issue.

The knowledge of LLMs is frozen by their training inputs; they can’t learn on the fly, and they have no long-term memory. There’s a long list of liabilities or deficiencies that overlap with AI in general that I won’t go through again here. Most importantly, they have not been validated in the real world of health systems, of diagnosing and treating patients, to prove their advantages and safety compared with current standards of care.

Bottom Line

This book is not only timely and well done, but will certainly help stimulate discussion about LLMs, and particularly the GPT-4 frontrunner, for transforming medicine. The marked enhancement of productivity is just one dimension of the revolution that the book gets into. It is indeed a very exciting time in medicine, when the term “revolution” is a reality in the making. I strongly recommend this book for all those clinicians and patients who want to get a sense of where we are headed. And I will be tracking GPT-x and LLMs closely here in Ground Truths, and how they will impact, both favorably and unfavorably, medicine and life science.

Thanks for subscribing and sharing Ground Truths.

The proceeds from all paid subscriptions will be donated to Scripps Research.